On the early stage of Machine Learning, Data Mining progress, one of the problems we have to deal with processing large-size file, including corpus shuffle, usually its size would be larger than our limited resources like memory or capacity. Let’s say, the file is 30GB, whereas the provided memory is 8GB or 16GB, we surely cannot load entire them to memory in term of resource shuffling distribution notion. Therefore, the strategic tends to serve that case called Lazy Shuffling Algorithm allowing us to generally trade off time and capacity for memory allocation.

Shuffle Algorithm

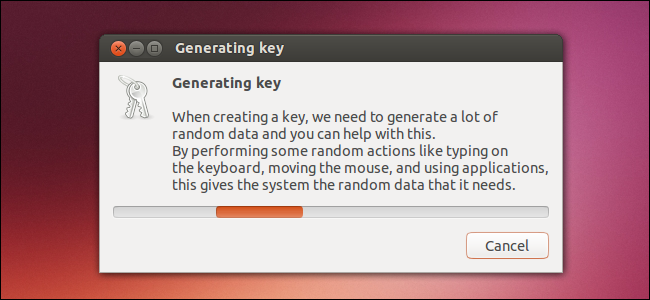

In Computer Science, mixing dataset randomly required unpredictable randomness method, that pure randomness leads the data to be more natural sense. Due to the fact that computer only creates a true random number indeed an external event from real world, by other words, it measures some type of physical phenomenon that takes place outside of the computer like keyboard typing, mouse moving, temporature, treated as environment seeds to make sure generated numbers cannot be reproduced by some kind of reverse engineering.

Actually, the true data shuffling with high and perfect expectation could be somewhere fulfill by pseudorandom generator, these random numbers completely created by algorithm and seed value, instead of external event factors are also reluctantly acceptable, since sequence results are closer to truly random in several proven experiments. From there, Fisher Yates shuffle algorithm, leverages the perspective of pseudorandom generator approach, says

-- To shuffle an array a of n elements (indices 0..n-1):

for i from n−1 downto 1 do

j ← random integer such that 0 ≤ j ≤ i

exchange a[j] and a[i]Wiki: https://en.wikipedia.org/wiki/Fisher%E2%80%93Yates_shuffle

The algorithm inspired by pseudorandom generator approach to randomly permutate in-memory swapping throughout entire dataset, thus, the in-place update does not incurred new dataset, of course no more spaces required before and after executing. But when if memory issue is a big deal, which would become puzzled to apply.

Lazy Shuffle Algorithm

Considering space is adjustable and time could be as fast as possible excepts memory is finite, we are not able to load whole large data based on FY Shuffle, then we have to improve it as Lazy Shuffle Algorithm, working around by shuffling index first, seeking and assigning new shuffled data later. Below algorithm serves the line by line format, if the file is a binary, we will have same approach but more complicated on binary serial structure.

- Load line by line and save the cursor offset, aka line map on each line into the offset_list. For example line 1 ends at 30, line 2 ends at 60 and so on, the offset_list should be

offset_list = [0, 30, 60, .... 40] - Create the index_list from range 0 to length of the offset_list, then the line 1 marked as index 0, line 2 marked as index 1,… The index_list must be

index_list = [0, 1, 2, 3, 4...., n] - Conduct shuffling index_list, in view of the fact that index_list contains only those indices, so it will not too much large resource basically. The sequence results would be also index_list object (because of in-place swapping) likely as

index_list = [4504, 134, 27676, 364756, 4...., 43] - Looping through shuffled index_list, we will leverage the offset_list to quickly access to the line, for example,

index_list[0] = 4504andoffset_list[4504] = 490478, seek file cursorfbyf.seek(490478)andnew_line = f.readline(), then appended writesnew_linesequetially in a new file instead of in-place swapping.

Python implementation

I have prepared the 5.6GB file aggregated by binhvq, uncompressed ~27.5GB:

https://github.com/binhvq/news-corpus#full-txt-v2

To easier demo, I will use colab for the whole algorithm, firsly, download the file and wait for completion.

!pip install --upgrade --no-cache-dir gdown

!gdown --no-cookies --id 1GFbe-qs6HmCYs0JwJgivOy2Bvb06M8OI

# !gdown --id 1GFbe-qs6HmCYs0JwJgivOy2Bvb06M8OI => Not work

!7z e corpus-full-20181217.7zIt will take ~10 min to finish process, so let move to next section

To seek the file partially, the example here

class LineSeekableFile:

def __init__(self, seekable):

self.fin = seekable

self.line_map = list() # Map from line index -> file position.

self.line_map.append(0)

while seekable.readline():

self.line_map.append(seekable.tell())

def __getitem__(self, index):

# NOTE: This assumes that you're not reading the file sequentially. For that, just use 'for line in file'.

self.fin.seek(self.line_map[index])

return self.fin.readline()Source: https://gist.github.com/JosephCatrambone/25476e9a37e932e50c527fc41e164e07

I modify a bit the class LineSeekableFile, on the first time initialization, the line will be loop entire the file, but only keep line positions to line_map aka offset_list, I set 10 lines read to easier testing

class LineSeekableFile:

\tdef __init__(self, f):

self.fin = f

# create the line map starting by 0

self.line_map = [0]

self.line_count = 0

file_pos = 0

for line in f:

file_pos += len(line)

self.line_map.append(file_pos)

self.line_count += 1

# Break for testing purpose

if self.line_count == 10:

break

def __getitem__(self, index):

# NOTE: This assumes that you're not reading the file sequentially.

# For that, just use 'for line in file'.

self.fin.seek(self.line_map[index])

return self.fin.readline()The index_list created by line_count, so when it reads 10 lines, it will get 10 lines and shuffle in that scope

import random

with open('corpus-full-0.2.txt', 'r', encoding='utf8',errors="ignore") as f, open('new-corpus.txt', 'w', encoding='utf8',errors="ignore") as fw:

seeker = LineSeekableFile(f)

index_list = list(range(seeker.line_count))

# Fisher Shuffle

random.shuffle(index_list)

for i in range(seeker.line_count):

print(f'Row {i}, swap with {index_list[i]}')

print(f'Origin {i}, {seeker[i]}')

print(f'Shuffled {index_list[i]}, {seeker[index_list[i]]}')

print('-----')

fw.write(seeker[index_list[i]])After finish testing, you can remove the print message and the breaking line to see performance.

Full code here:

# For colab only

!pip install --upgrade --no-cache-dir gdown

!gdown --no-cookies --id 1GFbe-qs6HmCYs0JwJgivOy2Bvb06M8OI

!7z e corpus-full-20181217.7z

import random

class LineSeekableFile:

def __init__(self, f):

self.fin = f

# create the line map starting by 0

self.line_map = [0]

self.line_count = 0

file_pos = 0

for line in f:

file_pos += len(line)

self.line_map.append(file_pos)

self.line_count += 1

# Break for testing purpose

if self.line_count == 10:

break

def __getitem__(self, index):

# NOTE: This assumes that you're not reading the file sequentially.

# For that, just use 'for line in file'.

self.fin.seek(self.line_map[index])

return self.fin.readline()

with open('corpus-full-0.2.txt', 'r', encoding='utf8',errors="ignore") as f, open('new-corpus.txt', 'w', encoding='utf8',errors="ignore") as fw:

seeker = LineSeekableFile(f)

index_list = list(range(seeker.line_count))

random.shuffle(index_list)

for i in range(seeker.line_count):

print(f'Row {i}, swap with {index_list[i]}')

print(f'Origin {i}, {seeker[i]}')

print(f'Shuffled {index_list[i]}, {seeker[index_list[i]]}')

print('-----')

fw.write(seeker[index_list[i]])

For code running in colab, please reference here: https://colab.research.google.com/drive/1rRO5u_WpXYkRdq3lugW-ed2v0UH525G5?usp=sharing